Funding: National Science Foundation: Cyberlearning EXP 2013-16 ($558,000, with UC Davis)

Overview

All students need to understand the mathematical concepts of fractions, rate, and proportion. Proportional reasoning, in particular, is essential for scientific and engineering practices and has many everyday practical applications. But as any primary-school student or teacher will tell you, these concepts can be difficult to learn.

- One way to approach this instructional problem is to step back and ask how people learn concepts in the first place. For centuries it has been recognized that people learn through interacting with the world. When computers entered educational practice, they offered only limited forms of interaction, because user interfaces were not yet sophisticated enough to let students act in natural or naturalistic ways. But now we have user interfaces such as the Wii, Kinect, Leap Motion, and touchscreen tablets and smartphones, as well as tangible technology and augmented and virtual reality. This range of platforms once again allows educational designers to build activities in which students interact with objects. Often these are virtual objects, but they elicit and shape essentially the same perceptual and action patterns (what we call sensorimotor schemes) as concrete objects do. Still, these are new forms of technology, and we are all figuring out what they might mean for learning, for example for learning mathematics. Ok, so students can learn through interacting with virtual objects. What about teachers?

- Whereas students can use educational software wherever they are, as long as they have a computer, you can't find a teacher everywhere. The problem is more complicated in interactive spaces where students need to manipulate the environment in particular ways, because the feedback they need in these activities goes beyond words. One idea is to create virtual teachers and place them in the same spaces. What should these virtual teachers be able to do?

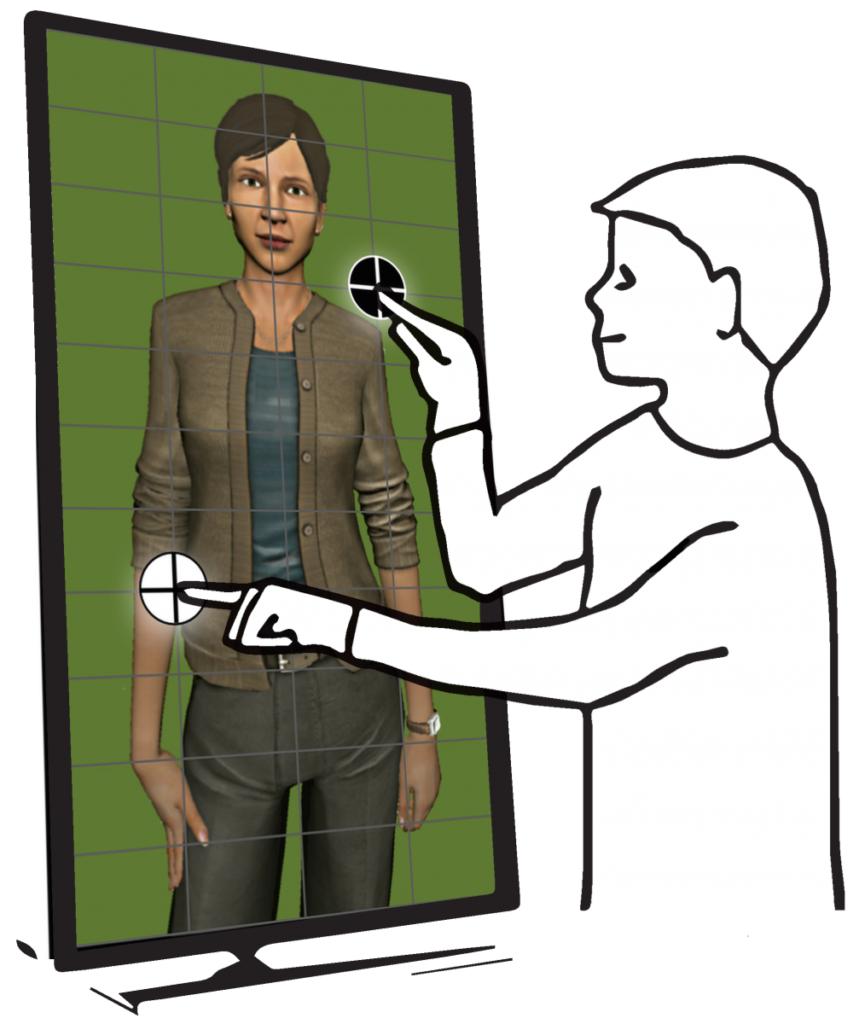

- We probably want a teacher to be able to speak. That way, students can keep their attention on the objects while listening to the teacher, rather than having to read instructions. What else? A growing body of research supports the claim that when teachers teach, they not only speak about the content but also move their hands in meaningful and important ways, what we call “gesture.” And if kids are learning through interacting with objects, perhaps the virtual teachers should be able to intervene in these interactions, for example by showing students how to manipulate the virtual objects. So the virtual teachers should themselves be able to manipulate the virtual objects and gesture about these actions. How do we do that?

- Animation software now enables us to create virtual characters that move their hands in ways that look quite naturalistic. Still, how might this virtual teacher know when to do what? The virtual teacher needs a virtual brain!

- State-of-the-art artificial intelligence now enables students to receive customized feedback from software as they interact with technology. Building such a brain would let the virtual teacher speak, gesture, and act intelligently, working with individual students in real time and responding in ways that optimize for learning.

- All these innovations have created an opportunity to design an interactive human-like teacher or “pedagogical agent” that intelligently and responsively supports the mathematical learning of students as they engage in interactive work with a computer interface. Meet Maria!

Ok, no more mister nice guy. Now for formal speak.

Intellectual Merit: Digital media offer potential affordances for democratizing access to quality mathematics instruction. However, the media’s effectiveness depends on Learning Sciences (LS) research into expert teachers’ instructional practices (WHAT to program into the software) and on virtual-agent technological capabilities (HOW to emulate these practices digitally). This proposal offers to make progress on both fronts synergistically. When teachers communicate about mathematical concepts, they spontaneously use the gesture modality to provide critical spatial-dynamical complements to verbal and symbolic utterances, for example when explaining the idea of slope. And yet these gestures have historically been invisible to researchers, due to: (a) the pervasive epistemological fallacy that mathematical knowledge resides in static symbolic inscriptions rather than in multimodal cognitive processes; and (b) the enduring analytical challenges that the gesture phenomenon presents, which impede encoding it for modeling and replication. Consequently, attention to gesture is mostly absent from academic and popular discourse about teacher practice and preparation. Unsurprisingly, then, digital simulations of teachers lack gesture, and thus virtual pedagogical agents still fall short of emulating human tutors’ discursive capability, to the detriment of students’ content comprehension. Two recent research developments, (1) LS insight into pedagogical gesture and (2) animation breakthroughs in gesture algorithms, create an exciting opportunity for an interdisciplinary Cyberlearning collaboration on designing interactive cognitive tutors with multimodal capability. This project seeks to build and evaluate an embodied-agent-based mathematics learning environment that supplements tutorial interaction with gestures that are realistically executed as well as naturalistically timed vis-à-vis students’ multimodal actions and speech contributions.
The design will seamlessly integrate the Mathematical Imagery Trainer for Proportion (MITP), an empirically researched embodied-learning device, with a gesturing pedagogical agent. Through designing gesture-generation algorithms that are deeply grounded in gesture and learning research, we hope to significantly improve the performance of pedagogical agents in the mathematics domain, while also developing general guidelines for generating agent gesture. Working with middle-school participants, initially as individuals and pairs and then scaling up to classroom implementations, we will experiment with a range of both interface designs (Wiimote vs. multi-touch screen) and agent gestural interaction patterns (e.g., pointing, representing, miming, coaching) to obtain a deeper understanding of how virtual agent embodiment impacts student learning. In turn, theoretical advances will also inform future development of pedagogical digital media across the curriculum. Finally, through the process of simulating expert teachers’ behaviors, we will gain a deeper understanding of these behaviors, in particular of teachers’ interactive use of multimodality. Via design-based empirical research cycles of implementation, analysis, and refinement, we hope to deliver: (i) technology: software and principles for augmenting pedagogical agents with new gesture-enriched capabilities; and (ii) theory: insights into the nature, types, and roles of gesture in educational interaction. Our team is multidisciplinary, with an LS investigator bringing expertise in mathematics education, embodied cognition, and design-based research, and a computer science investigator with expertise in the engineering and research of animated virtual agents with gesture capability.

Broader Impacts: Despite consistent reform efforts, US mathematics students still lag behind their global peers. Digitally administered quality instruction offers potential responses to this predicament, but only to the extent that its design builds on research into effective teaching and leverages computation to simulate this expertise. Yet currently available digital media are, broadly speaking, diluted rather than enhanced versions of effective teaching; online options include non-interactive videotaped lessons, individualized conversation-based interactions with human tutors, and non-gesturing interactive AI tutors. By infusing interactive agent tutors with naturalistic, domain-appropriate gesture, the proposed media will provide the missing link toward massive distribution of naturalistic tutorial interaction. At the same time, theoretical insights into pedagogical gesture could be leveraged to create new content and activities for pre- and in-service teachers participating in professional development. The proposing laboratories will proactively disseminate their theoretical work and design innovations by publishing in leading journals, participating in conferences and workshops, and hosting visiting scholars. These laboratories are diverse, collaborative collectives dedicated to training the next generation of LS/CS researchers.

Publications

Pardos, Z. A., Rosenbaum, L. F., & Abrahamson, D. (2021). Characterizing learner behavior from touchscreen data. International Journal of Child-Computer Interaction, 100357. https://doi.org/10.1016/j.ijcci.2021.100357

ABSTRACT: As educational approaches increasingly adopt digital formats, data logs create a newfound wealth of information about student behaviors in those learning environments for educators and researchers. Yet making sense of that information, particularly to inform pedagogy, remains a significant challenge. Data from digital sensors that sample at the millisecond level of granularity, such as computer mouses or touchscreens, is notoriously difficult to computationally process and mine for patterns. Adding to the challenge is the limited domain knowledge of this biological sensor level of interaction which prohibits a comprehensive manual feature engineering approach to utilize those data streams. In this paper, we attempt to enhance the assessment capability of a touchscreen-based tutoring system by using Recurrent Neural Networks (RNNs) to predict students’ strategies from their 60 Hz data streams. We hypothesize that the ability of neural networks to learn representations automatically, instead of relying on human feature engineering, may benefit this classification task. Our classification models (including majority class) were trained and cross-validated at several levels on historical data which had been human coded with learners’ strategies. Our RNN approach to this difficult classification task moderately advances performance above logistic regression. We discuss the implications of this performance for enabling greater tutoring system autonomy. We also present visualizations that illustrate how this neural network approach to modeling sensor data can reveal patterns detected by the RNN. The surfaced patterns, regularized from a larger superset of mostly uncoded data, underscore the mix of normative and seemingly idiosyncratic behavior that characterizes the state space of learning at this high frequency level of observation.

Pardos, Z. A., Hu, C., Meng, P., Neff, M., & Abrahamson, D. (2018). Classifying learner behavior from high frequency touchscreen data using recurrent neural networks. In D. Chin & L. Chen (Eds.), Adjunct proceedings of the 26th Conference on User Modeling, Adaptation and Personalization (UMAP ’18) (6 pages). Singapore: ACM.

ABSTRACT: Sensor stream data, particularly those collected at the millisecond level of granularity, have been notoriously difficult to extract classifiable signal from. Adding to the challenge is the limited domain knowledge that exists at these biological sensor levels of interaction, which prohibits a comprehensive manual feature engineering approach to classification of those streams. In this paper, we attempt to enhance the assessment capability of a touchscreen-based ratio tutoring system by using Recurrent Neural Networks (RNNs) to predict the strategy being demonstrated by students from their 60 Hz data streams. We hypothesize that the ability of neural networks to learn representations automatically, instead of relying on human feature engineering, may benefit this classification task. Our RNN and baseline models were trained and cross-validated at several levels on historical data which had been human coded with the strategy believed to be exhibited by the learner. Our RNN approach to this difficult task moderately advances performance above baselines, and we discuss what implications this level of assessment performance has for enabling greater autonomy in the tutoring system. We further delve into this neural network approach to modeling sensor data by exploring the nature of patterns detected by the RNN through visualizations of their hidden states. The surfaced patterns, regularized from a larger superset of mostly uncoded data, underscore the mix of normative and seemingly idiosyncratic behavior that characterizes the state space of learning at this level of observation.

Abdullah, A., Adil, M., Rosenbaum, L., Clemmons, M., Shah, M., Abrahamson, D., & Neff, M. (2017). Pedagogical agents to support embodied, discovery-based learning. In J. Beskow, C. Peters, G. Castellano, C. O’Sullivan, I. Leite, & S. Kopp (Eds.), Proceedings of the 17th International Conference on Intelligent Virtual Agents (IVA 2017) (pp. 1-14). Cham: Springer International Publishing.

ABSTRACT: This paper presents a pedagogical agent designed to support students in an embodied, discovery-based learning environment. Discovery-based learning guides students through a set of activities designed to foster particular insights. In this case, the animated agent explains how to use the Mathematical Imagery Trainer for Proportionality, provides performance feedback, leads students to have different experiences and provides remedial instruction when required. It is a challenging task for agent technology as the amount of concrete feedback from the learner is very limited, here restricted to the location of two markers on the screen. A Dynamic Decision Network is used to automatically determine agent behavior, based on a deep understanding of the tutorial protocol. A pilot evaluation showed that all participants developed movement schemes supporting proto-proportional reasoning. They were able to provide verbal proto-proportional expressions for one of the taught strategies, but not the other.

Flood, V. J., Neff, M., & Abrahamson, D. (2016, July). Animated-GIF libraries for capturing pedagogical gestures: An innovative methodology for virtual tutor design and teacher professional development. Paper presented at the 7th annual meeting of the International Society for Gesture Studies, Paris, July 18-22.

ABSTRACT: We report on a novel approach for archiving repertoires of multimodal pedagogical techniques using animated Graphics Interchange Format (GIF) files. This methodology was developed as part of an interdisciplinary design collaboration between a team of learning scientists (LS-team) and computer scientists (CS-team) working to build a virtual, animated tutor capable of multimodal communication for an interactive computer-based mathematics learning system.

Flood, V. J., Neff, M., & Abrahamson, D. (2015). Boundary interactions: Resolving interdisciplinary collaboration challenges using digitized embodied performances. In O. Lindwall, P. Häkkinen, T. Koschmann, P. Tchounikine, & S. Ludvigsen (Eds.), “Exploring the material conditions of learning: opportunities and challenges for CSCL,” the Proceedings of the Computer Supported Collaborative Learning (CSCL) Conference (Vol. 1, pp. 94-101). Gothenburg, Sweden: ISLS.

ABSTRACT: Little is known about the collaborative learning processes of interdisciplinary teams designing technology-enabled immersive learning systems. In this conceptual paper, we reflect on the role of digitally captured embodied performances as boundary objects within our heterogeneous two-team collective of learning scientists and computer scientists as we design an embodied, animated virtual tutor embedded in a physically immersive mathematics learning system. Beyond just a communicative resource, we demonstrate how these digitized, embodied performances constitute a powerful mode for both inter- and intra-team learning and innovation. Our work illustrates the utility of mobilizing the material conditions of learning.

Flood, V. J., Schneider, A., & Abrahamson, D. (2015, April). Moving targets: Representing and simulating choreographies of multimodal pedagogical tactics for virtual agent mathematics tutors. Paper presented at the annual meeting of the American Educational Research Association, Chicago, April 16-20.

ABSTRACT: A team of UC Berkeley Learning Scientists (Abrahamson, Director) and UC Davis Computer Scientists (Neff, Director) are collaborating on an NSF Cyberlearning EXP project, “Gesture Enhancement of Virtual Agent Mathematics Tutors.” The UCB team has been generating a library of pedagogical gestures for the UCD team to simulate virtually, based on motion capture. Our gesture-archiving effort yielded unexpected insights: (1) analysis of this library sensitized us to aspects of our own multimodal pedagogical communication, such as gaze, that we had overlooked in prior microgenetic modeling; and (2) an animated library of multimodal pedagogical tactics could serve in teachers’ professional training and development.

Flood, V. J., Schneider, A., & Abrahamson, D. (2014). Gesture enhancement of a virtual tutor via investigating human tutor discursive strategies: Forms and functions for proportions. In J. L. Polman, E. A. Kyza, D. K. O’Neill, I. Tabak, W. R. Penuel, A. S. Jurow, K. O’Connor, T. Lee, & L. D’Amico (Eds.), Proceedings of “Learning and Becoming in Practice,” the 11th International Conference of the Learning Sciences (ICLS) 2014 (Vol. 3, pp. 1593-1594). Boulder, CO: International Society of the Learning Sciences.

ABSTRACT: We examine expert human mathematics-tutor gestures in the context of an interactive design for proportionality in order to design a virtual pedagogical agent. Early results implicate distinct gesture morphologies serving consistent contextual functionalities in guiding learners towards quantitative descriptions of proportional relations.